We have officially entered the “Wild West” era of the internet. Over the last few months, the conversation around Artificial Intelligence has shifted from “what can this tool do for us?” to a much more localized, private concern: “what is this tool doing to us?” While mainstream companies like Google and OpenAI have spent millions building “safety rails” to prevent their AI from creating harmful content, a parallel world of uncensored AI is growing rapidly.

The people who build these unfiltered models call it a win for open source and creative freedom. They argue that a person should be able to generate whatever they want on their own hardware without a corporation acting as a moral babysitter. But by early 2026, we are seeing that this “freedom” comes with a massive, unaddressed bill that the rest of society is being forced to pay.

The Problem of “Locked” vs. “Unlocked” AI

To understand the risk, you have to look at how these models actually work. A standard AI generator is built with “negative constraints”: filters that check each request and block certain categories of output. If you ask it to create something violent or a fake image of a real person, it simply refuses. In effect, a layer of digital ethics is built into the product.

Uncensored AI is different. It is essentially the same powerful engine, but with the brakes cut and the seatbelts removed. These models are often modified versions of open-source software like Stable Diffusion, where developers have gone in and manually stripped away the safety filters.
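The difference between a locked and an unlocked model can be illustrated with a toy example. This is only a minimal sketch under loud assumptions: `fake_generator`, `BLOCKED_TERMS`, `locked_generate`, and `unlocked_generate` are hypothetical names invented for illustration, and production systems rely on trained classifiers over prompts and outputs rather than a keyword list.

```python
from typing import Callable

# Hypothetical blocklist for illustration only; real safety systems
# use trained classifiers, not simple keyword matching.
BLOCKED_TERMS = {"violence", "real person"}


def fake_generator(prompt: str) -> str:
    """Stand-in for an image model: returns a text description, not pixels."""
    return f"<image for: {prompt}>"


def locked_generate(prompt: str, generator: Callable[[str], str] = fake_generator) -> str:
    """Check the prompt against the blocklist before calling the generator."""
    lowered = prompt.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "REFUSED"
    return generator(prompt)


def unlocked_generate(prompt: str, generator: Callable[[str], str] = fake_generator) -> str:
    """The same engine with the safety check removed."""
    return generator(prompt)
```

In this sketch the two functions share the same generator; the only difference is whether a check runs first, which is why removing the check is enough to turn one into the other.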

The danger here isn’t just that people can make “edgy” art. The danger is that these tools are now decentralized. In the past, if a website hosted bad content, you could send a legal notice to get it taken down. But you can’t send a notice to a piece of software sitting on someone’s private hard drive in another country. Once an uncensored model is downloaded, it stays there forever: a permanent, private factory for generating whatever the user wants, regardless of who it hurts.

The Weaponization of Personal Likeness

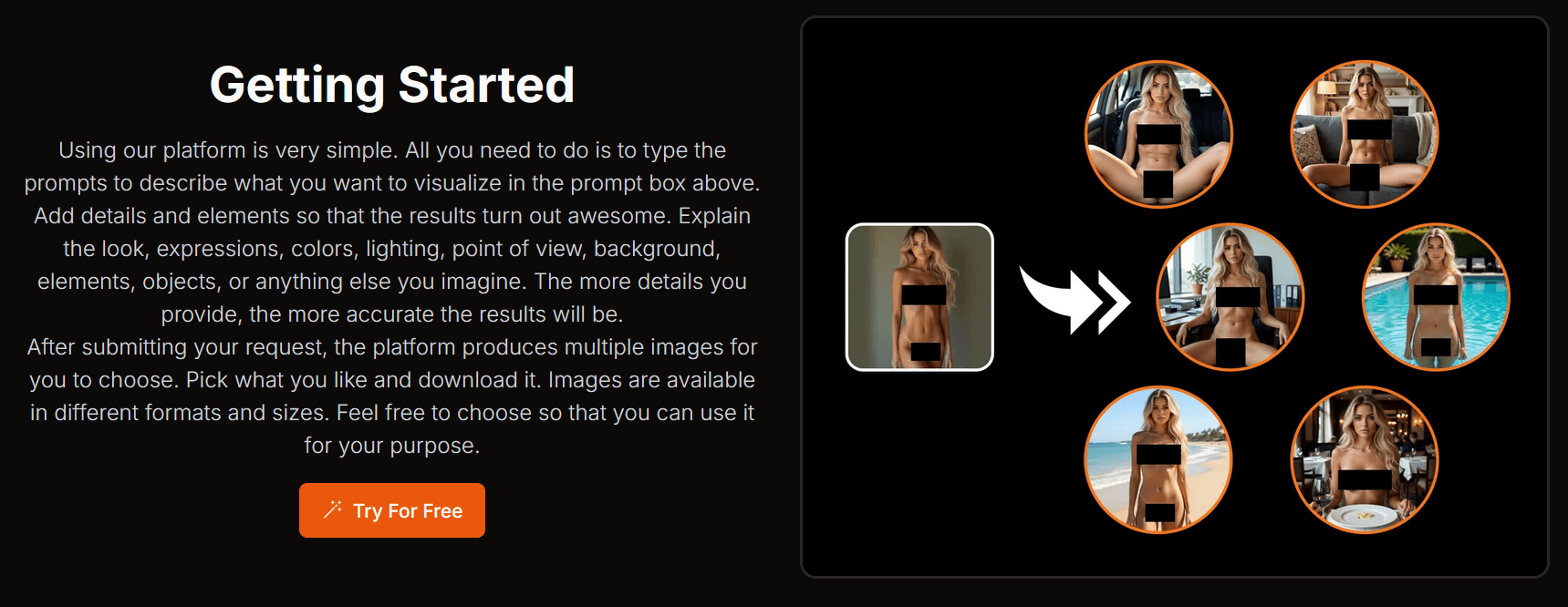

In 2025, we saw this technology move from a technical curiosity to a genuine social crisis. The rise of “nudify” apps, which use uncensored AI to strip clothing from photos of real people, is the most obvious example. These aren’t just tools for hackers; they are being used by ordinary people to bully classmates, coworkers, or ex-partners.

The ethical issue here is one of consent. When an AI is uncensored, it doesn’t recognize that a human being’s face or body is their own property. It treats every pixel as fair game. This has created a world where your digital identity can be stolen and manipulated in seconds, and there is almost no way to stop it once the image is out there.

Truth Decay and the “Liar’s Dividend”

If the first major risk of uncensored AI is the attack on individuals, the second is the attack on our collective reality. We’ve always known that photos could be edited, but we are entering a phase where the volume of fake content is so high that it’s creating a phenomenon experts call “Truth Decay.”

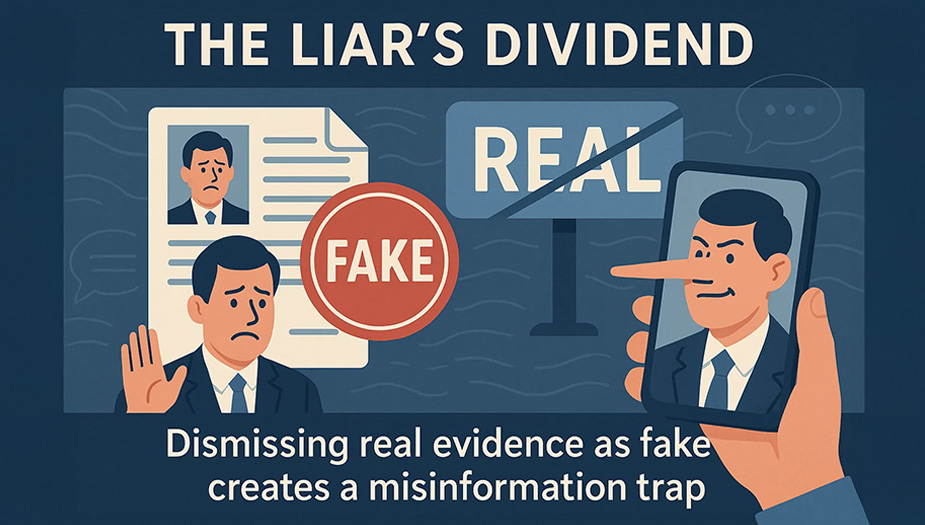

By early 2026, we’ve seen a strange and dangerous side effect of deepfakes: the Liar’s Dividend.

Here’s how it works. Because everyone knows that uncensored AI can create a perfect fake of anything (like a politician taking a bribe, a protest in the streets, or a scandalous phone call), public figures now have a “get out of jail free” card. Whenever a real piece of damaging evidence surfaces, they can simply point at it and say, “That’s just an AI deepfake.”

The mere existence of uncensored tools makes the truth itself debatable. We saw this play out in several local elections in late 2025, where genuine audio recordings were dismissed by supporters as “synthetic propaganda.” When you can’t trust your eyes or ears, you stop looking for the truth and start believing whatever fits your existing bias.

Geopolitical Misinformation at Scale

Uncensored models are particularly dangerous in the hands of state actors and professional trolls because they don’t have the “political filters” that mainstream AIs use. If you ask a “safe” AI to create a fake image of a world leader in a compromising military situation, it will refuse. An uncensored model will produce it in seconds.

This isn’t just a theory. In the summer of 2025, a record 10% of all fact-checked news articles globally involved some form of AI-generated content. These weren’t just low-quality memes; they were hyper-realistic “events” designed to trigger emotional responses and civil unrest.

- Tailored Disinformation: AI allows bad actors to create localized fakes. Imagine a world leader speaking in a niche regional dialect to endorse a local candidate.

- The Persistence of Doubt: Even after a deepfake is debunked, the emotional impact remains. Research shows that once people see a convincing image, they struggle to fully un-believe it, even when told it was fake.

- The Detection Gap: We are currently in an arms race we are losing. For every new deepfake detection tool that is released, a new jailbroken model is developed to bypass it.

We are moving from an era of “seeing is believing” to an era of “everything is potentially a lie.” This doesn’t just confuse the public; it paralyzes them. When people don’t know what to believe, they often end up believing nothing at all, which is the ultimate goal of those who use these uncensored tools for harm.

The Rise of the “Abuse Machine”

In January 2026, the Internet Watch Foundation (IWF) released a report that sent shockwaves through the tech world. In 2025, they saw a 26,362% spike in hyper-realistic AI-generated videos showing child abuse. To put that in perspective: they found 3,440 of these videos in 2025, compared to just 13 the year before.
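The size of that jump follows directly from the two counts. As a quick check of the arithmetic, here is a small sketch; the function name `percent_increase` and the variable names are illustrative and do not come from the IWF report.

```python
def percent_increase(old: int, new: int) -> float:
    """Percentage change from old to new, relative to old."""
    return (new - old) / old * 100


videos_2024 = 13    # count reported for the year before
videos_2025 = 3440  # count reported for 2025

increase = percent_increase(videos_2024, videos_2025)
print(f"{increase:,.0f}%")  # prints 26,362%
```

Put another way, the 2025 count is roughly 265 times the previous year's.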

This isn’t happening by accident. It is a direct result of “safety-off” models that have been released into the wild. When a model is uncensored, it doesn’t just allow for “edgy” art; it becomes a tool that can be used to generate the most horrific content imaginable with a simple text prompt.

The “No Victim” Myth

The biggest ethical defense used by proponents of uncensored AI is the idea that “no real person was harmed.” They argue that if the child in the image is entirely synthetic (that is, made of pixels and math), then it isn’t a crime.

But this argument falls apart for three major reasons:

- The Training Data: AI doesn’t create out of thin air. To learn what a human looks like, these models are trained on billions of real photos. There is growing evidence that some open-source models were trained on datasets that included real abuse material, meaning every “synthetic” image is built on a foundation of real-world trauma.

- The Victim Identification Crisis: Police and child protection services are now being flooded with thousands of hyper-realistic images. They have to spend thousands of hours trying to figure out if the child in the photo is a real person who needs to be rescued or just a “synthetic” creation. Every hour spent on a fake image is an hour taken away from a real child in danger.

- Normalizing the Abhorrent: Experts warn that the availability of this material lowers the threshold for real-world crimes. It allows predators to practice or act out fantasies, which often leads to seeking out real-world victims.

A Legal Grey Area

We are currently in a race between technology and the law. While many countries have moved to ban “AI-generated CSAM,” the decentralized nature of uncensored AI makes enforcement almost impossible. If the software is running on a private computer, there is no central server for the government to shut down.

In late 2025, several US states passed laws that make the possession of these synthetic images a serious crime, regardless of whether a real child was involved. However, the technology is moving so fast that our courts are struggling to keep up. We are left with a terrifying reality: the tools to create this material are free, easy to use, and available to anyone with an internet connection.

The 2026 Turning Point: Regulation vs. Personal Responsibility

As we move through 2026, the “Wild West” era of uncensored AI is facing its first real reckoning. Governments are no longer treating this as a futuristic debate; they are treating it as a present-day infrastructure crisis. In the European Union, the full implementation of the AI Act by August 2026 is set to change the game. For the first time, developers of high-risk models will be legally required to document their training data and ensure their outputs aren’t amplifying the biases we discussed earlier.

But as we have seen, the decentralized nature of these tools makes local laws difficult to enforce. If a model is running on a private laptop in a country with no AI oversight, a fine from the EU doesn’t mean much. This is where the burden shifts from the regulators to the users and the platforms.

The Shift Toward Transparency and Labeling

One of the most significant shifts in 2026 is the move toward “Content Provenance.” Large platforms like Meta, X, and Google are beginning to implement machine-readable labels on every image. These invisible watermarks don’t just say “This is AI”; they tell a story of where the image came from.

- The Fight for “Human-Only” Spaces: We are seeing the rise of digital communities that strictly ban any form of AI generation, creating “verified human” zones.

- Corporate Accountability: After the Grok scandals of early 2026, companies are being pressured to move away from absolute freedom and toward accountable freedom, where paying subscribers must verify their identity before they can access the most powerful, uncensored features.
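The idea behind machine-readable provenance labels can be sketched in a few lines. This is a toy illustration, not how any platform named above actually does it: it assumes a hypothetical shared secret (`SIGNING_KEY`) and stores the label alongside the image, whereas real provenance standards such as C2PA use public-key signatures and manifests embedded in the file, and invisible watermarks work differently again. The names `make_label` and `verify_label` are invented for this example.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret for the demo; real systems use public-key signatures.
SIGNING_KEY = b"demo-key-not-for-production"


def make_label(image_bytes: bytes, generator: str) -> dict:
    """Build a signed label that records the image hash and its origin."""
    claim = {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    claim["signature"] = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return claim


def verify_label(image_bytes: bytes, label: dict) -> bool:
    """Return True only if the label is untampered and matches the image."""
    claim = {k: v for k, v in label.items() if k != "signature"}
    payload = json.dumps(claim, sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, label.get("signature", ""))
    hash_ok = claim.get("sha256") == hashlib.sha256(image_bytes).hexdigest()
    return signature_ok and hash_ok
```

A platform could call `verify_label` on upload: an edited image or an altered label fails the check. Note that a label like this is only useful when it is present, so it cannot flag output from a model that never attaches one.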

Conclusion: The Final Choice

We are at a crossroads. Uncensored AI image generators offer a level of creative power that was unimaginable five years ago. They allow us to explore the furthest reaches of our imagination without a corporate filter. But we have to ask ourselves: is that creative “high” worth the price of a broken digital society?

The ethical issue isn’t really about the code; it’s about the people using it. As long as we value likes and clout more than consent and truth, uncensored AI will continue to be weaponized.

The risks are real: the deepfakes, the disinformation, the exploitation, and the automated bias. But the solution isn’t just a better algorithm or a stricter law. It’s a return to a simple, human principle: just because you can create anything doesn’t mean you should. As we move forward into this AI-saturated future, our most important tool won’t be a faster GPU or a better prompt. It will be our own sense of empathy and responsibility.