Artificial Intelligence (AI) has transformed many industries, including healthcare, finance, automation, and entertainment.

But AI also raises ethical issues when it is misused. A widely discussed example is undress AI tools, which alter images to make people appear unclothed.

Some of these tools claim to use advanced AI to produce realistic alterations. Still, their use raises serious privacy, ethical, and legal questions. They have prompted debate about consent, online harassment, and digital rights, and they face growing scrutiny around the world.

In this article, we look at how undress AI tools work, their effects, the ethical issues they raise, and what is being done to stop their misuse.

How Do Undress AI Tools Work?

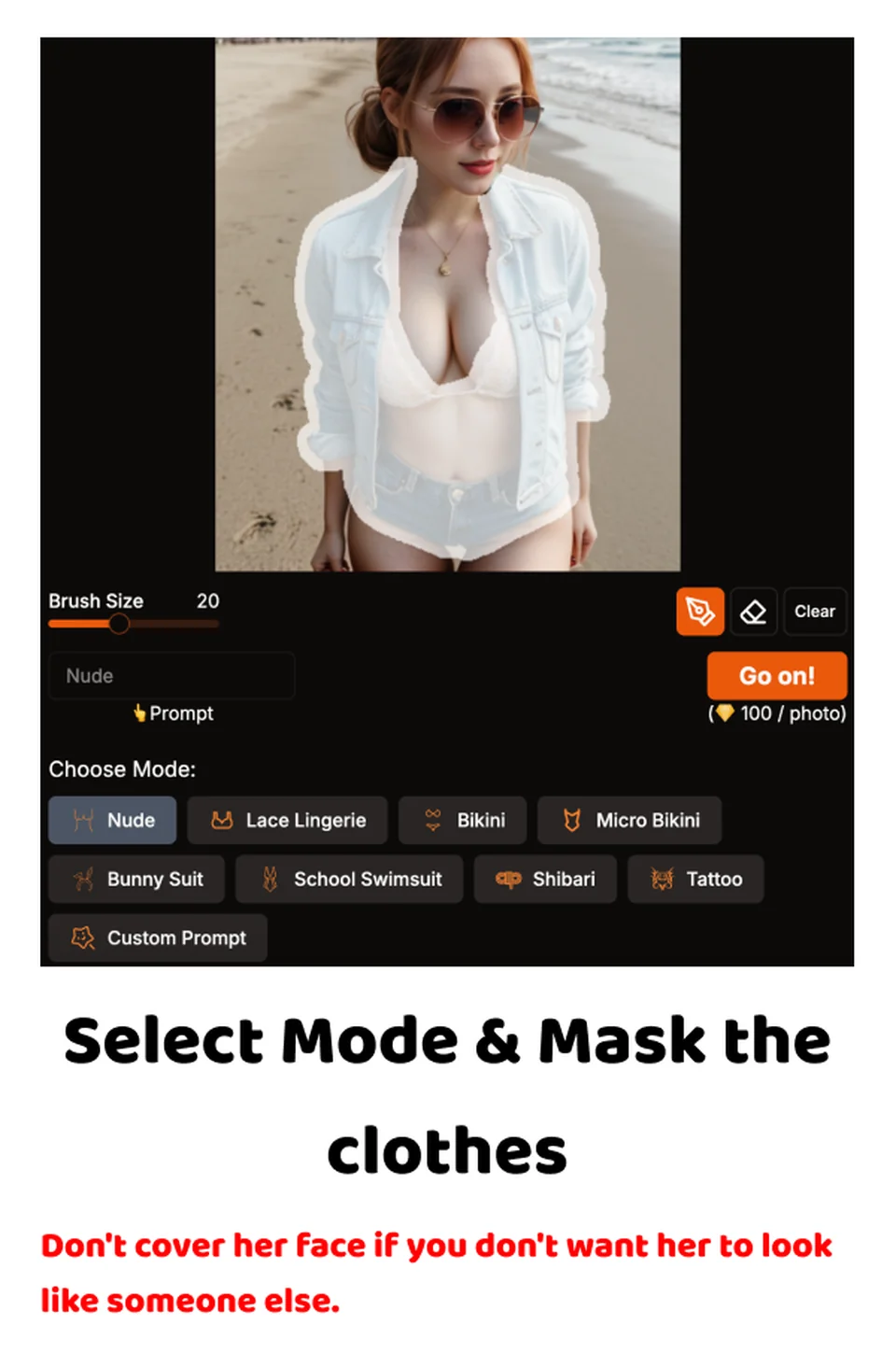

Undress AI tools use machine learning to alter images by removing clothing and generating synthetic skin textures. They draw on several AI methods, such as:

- Generative Adversarial Networks (GANs)

GANs consist of two networks: a generator that produces altered images and a discriminator that evaluates them. The two are trained against each other, so the generated images become more convincing over time.

- Deep Learning and Neural Networks

Neural networks are trained on large numbers of images of human bodies to learn patterns of skin, musculature, and shape. This lets the model predict alterations that look plausible in a given image.

- Computer Vision and Edge Detection

Computer vision techniques let the AI locate clothing edges, recognize fabric patterns, and estimate what the body’s hidden parts might look like.

- Real-Time Image Processing

Some undress tools need powerful computing systems to produce results quickly and without lag. They also often rely on cloud-based models to render fine detail.

Even with all this technical progress, undress AI tools still have major flaws. Many still get skin color wrong, and they can produce unrealistic textures, inconsistent lighting, or body shapes that don’t line up correctly.

How Realistic Are AI Tools for Undressing?

The realism of undress AI tools depends on many factors, such as the sophistication of the AI model, the quality of the training data, and the available computing power. Some advanced tools claim to produce nearly lifelike images, but many still show obvious errors.

Factors That Affect Realism

- Training Data Quality: Models trained on sharp, varied data usually produce more convincing images.

- Lighting and Shadows: More advanced tools try to reproduce natural lighting and shadows on the skin.

- Texture and Skin Tone Match: Convincing images require skin tones that blend correctly and textures that look natural.

- Clothing Complexity: AI struggles with complex clothing such as layers or see-through fabrics.

- Pose and Background Effects: Complex poses or busy backgrounds often cause distorted results.

Even if some tools can alter images well enough to pass at first glance, significant flaws remain, so many of these AI-generated images are easy to identify on close inspection.

Ethical and Legal Concerns About Undress AI Tools

The emergence of undress AI tools has sparked debate about ethics and law around the world. Some argue that AI should allow for creative uses, while others say these tools violate basic rights and promote online harassment.

- Privacy & Consent Problems

A key concern about undress AI tools is the alteration of images without permission. Many people have been victimized by deepfake technology, with their pictures altered without their consent, causing serious harm to their reputation and mental health.

- Risks of Cyber Harassment and Blackmail

AI-generated nude images are often used in cyberbullying, revenge porn, and blackmail. The easy availability of these tools makes digital abuse worse, and it disproportionately affects women and public figures.

- AI Ethics & Moral Limits

AI ethics experts caution that misuse of these tools sets a bad precedent for future technology. When technology is weaponized for harmful purposes such as defamation or altering images without consent, it undermines the responsible use of artificial intelligence.

- Government Actions & New Laws

Countries around the world are working on stricter rules against the misuse of AI-generated explicit content. Many have passed laws against deepfake pornography, with penalties for sharing altered images made without consent.

- The USA has passed anti-deepfake laws targeting those who make or share fake, harmful images as part of harassment.

- The UK’s Online Safety Act criminalizes sharing explicit AI-generated images of a person without their consent.

- EU regulations require companies that build AI systems to put safeguards in place against such misuse and to keep track of generated material.

Even so, enforcing these rules is difficult because undress AI tools remain available on dark web markets and unmoderated websites.

How to Detect and Guard Against AI-Generated Undress Images

As AI tools improve, it is increasingly important to develop ways to spot fake images and protect people from digital abuse.

- AI Detection Tools

Some organizations are building tools to detect deepfake images. These tools look for pixel-level artifacts, lighting inconsistencies, and distorted faces to spot fakes.

- Reverse Image Search

Services like Google’s reverse image search let people check whether altered versions of their photos are circulating online.

- Strengthening Privacy Settings

People can reduce risk by tightening their social network settings so fewer strangers can see their personal pictures.

- Legal Actions and Reporting

People targeted by AI-generated undress images should report them to the police and use legal channels to have the fake content removed from websites. Many platforms now have strict rules against this kind of abuse.

- Public Awareness & Responsible AI Use

Advocacy groups and tech companies need to work together to educate the public about ethical AI use, online safety, and responsible sharing of AI-generated content.
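
On the protective side, checking whether copies of a photo are circulating usually comes down to comparing image fingerprints. How Google or any other service does this internally is not covered here. The following is only a minimal sketch of one well-known fingerprinting technique, average hashing, written in plain Python. The function names, the 8x8 grid, and the distance threshold of 10 are all illustrative assumptions, and images are represented as simple grids of grayscale values rather than real image files.

```python
from typing import List

# Assumption: an image is a row-major grid of grayscale values (0-255).
Image = List[List[int]]


def downscale(img: Image, size: int = 8) -> List[List[float]]:
    """Shrink an image to size x size by averaging rectangular blocks."""
    height, width = len(img), len(img[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    small = []
    for by in range(size):
        y0, y1 = by * height // size, (by + 1) * height // size
        row = []
        for bx in range(size):
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        small.append(row)
    return small


def average_hash(img: Image, size: int = 8) -> int:
    """Return a size*size-bit fingerprint: 1 where a cell is brighter than the mean."""
    cells = [v for row in downscale(img, size) for v in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def looks_like_same_photo(a: Image, b: Image, max_distance: int = 10) -> bool:
    """Hypothetical helper: treat a small fingerprint distance as a likely match."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance
```

A fingerprint like this tolerates small changes such as brightness shifts or resizing, so it can flag re-uploaded or lightly edited copies of a photo. It is not a deepfake detector, and a heavily altered image may no longer match, which is why reporting channels and dedicated detection tools still matter.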

The Future of AI and Ethics

AI technology for image manipulation is advancing quickly, bringing exciting opportunities along with serious problems. It is valuable in areas like medical imaging, art, and digital design, but it is also misused in fake nudity tools and deepfakes that raise major ethical issues.

Frequently Asked Questions

- Should There Be Limits on AI Uses?

There is ongoing debate over whether AI needs regulation. Some experts want stricter rules; others say too much regulation could stifle innovation.

- How to Use AI the Right Way?

Developers and policymakers must set ethical guidelines to ensure AI benefits people while keeping risks low.

- Using AI in Online Security

AI is not only used to create fake images; it can also help catch online abuse. The same technology that makes fakes can help find and remove harmful content on the internet.

Conclusion

The development of realistic undress AI tools shows both the power and the dangers of AI technology. As machine learning and deepfake techniques advance, so do the ethical and legal questions around their use. AI is like a sharp knife: used properly, it can help businesses and improve lives, but used wrongly, it can violate privacy, exploit people, and fuel cybercrime.

As AI keeps advancing, it is crucial for governments, developers, and users to prioritize ethics, consent, and legal safeguards so that AI stays useful rather than harmful. How we handle these issues will shape the future of AI.