With the advancements in Artificial Intelligence (AI), it is now possible to generate images from text, edit photos photorealistically, and even create artwork. However, despite its benefits, this technology also carries dangers. To keep it from being abused, tools that apply image censorship, or safety filters, have been developed. They help ensure that the images produced or shared are safe and legal.

Censorship in AI images simply refers to regulating the kinds of images an AI system is allowed to create, display, or edit. Without such regulation, AI could be used to produce dangerous, offensive, or even illegal images. That is why companies are investing heavily in AI safety features.

Why Is AI Image Censorship Important?

Safety filters protect:

- Children and teenagers

- Public morals and social values

- Personal privacy

- Laws and ethical standards

These rules help AI remain a helpful tool instead of becoming dangerous.

What Are Safety Filters?

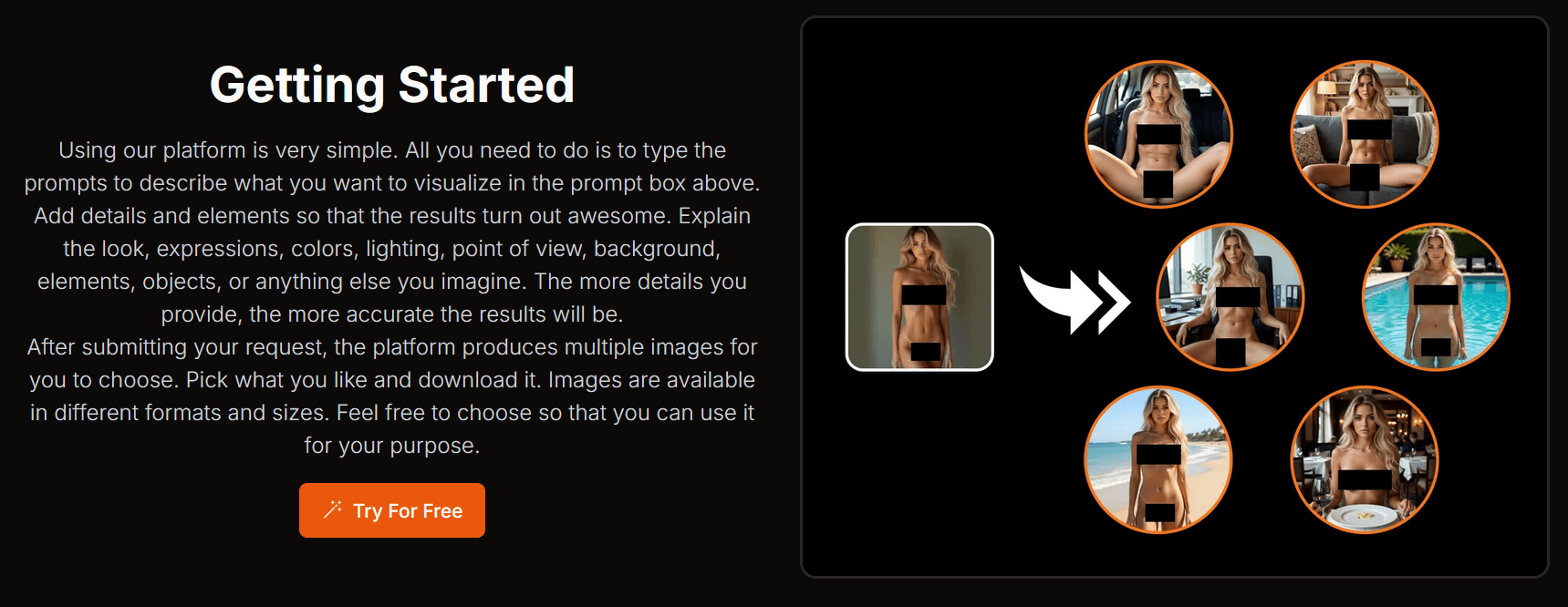

Safety filters are rules and detection systems that decide whether an image request or result is allowed. They work before and after an image is generated.

There are two main types:

- Input filters check what the user is asking for

- Output filters check the image before showing it

If something breaks the rules, the AI will block the request, blur the image, or refuse to generate it.
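
The two filter types can be pictured as two checkpoints around the image generator. The following is a minimal sketch, not any vendor's actual implementation: `run_pipeline`, `Result`, and the three callables passed in are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Result:
    allowed: bool
    stage: str        # "input", "output", or "done"
    reason: str = ""


def run_pipeline(
    prompt: str,
    input_filter: Callable[[str], bool],    # hypothetical: True if the prompt is acceptable
    generate: Callable[[str], bytes],       # hypothetical: stands in for the image model
    output_filter: Callable[[bytes], bool], # hypothetical: True if the image is acceptable
) -> Result:
    # Checkpoint 1: inspect the request before any image is generated.
    if not input_filter(prompt):
        return Result(False, "input", "prompt rejected")

    image = generate(prompt)

    # Checkpoint 2: inspect the generated image before showing it.
    if not output_filter(image):
        return Result(False, "output", "image rejected")

    return Result(True, "done")
```

Because the input check runs first, a rejected prompt never reaches the generator at all, which is the cheaper place to stop a request.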

How AI Sees Images and Requests

AI image systems learn from millions of images and descriptions. To train the safety component, images are labeled as safe or unsafe, and the system learns to recognize patterns such as:

- Nudity or sexual material

- Violence or weapons

- Hate symbols or abusive gestures

- Faces of actual individuals (to prevent deepfakes)

When you enter a prompt, the AI reads the text with the help of language models. It checks for dangerous words or indirect meanings. If it finds that the request is dangerous, it stops.

If the request passes, the AI generates the image and then verifies it before displaying it to you.

AI Image Censorship Example

“Create an image of a real celebrity doing something illegal.”

The safety system will block it because:

- It involves a real person

- It could harm that person’s reputation

- It would present false information as real

In that case, the system might show a warning on screen or suggest rephrasing the request.

Various Forms of AI Image Safety Filters

AI image censorship involves multiple layers of safety rather than just one filter. Each layer has its own role, so that harmful content is stopped as early as possible. Let’s break them down in simple terms.

Text Prompt Filtering

Text filtering is the first step. Whenever you type a request, the AI checks its words and meaning. It looks for:

- Sexual or adult language

- Acts of violence

- Hate speech or harassment

- Illicit activities

- Requests that include real people

Advanced AI analyzes the intent behind a request, even if the words have been changed or masked. For instance, it can detect the use of symbols or slang in place of direct words.
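
To illustrate how masked words can be caught, here is a minimal sketch that undoes common character swaps before checking a blocklist. It is a simplification under stated assumptions: production systems typically rely on trained language models rather than keyword lists, and `LEET_MAP`, `BLOCKED_TERMS`, `normalize`, and `flagged_terms` are hypothetical names with placeholder values.

```python
import re

# Assumed character substitutions, for illustration only.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)

# Placeholder blocklist; a real list would be far larger and curated.
BLOCKED_TERMS = {"gore", "nude"}


def normalize(prompt: str) -> str:
    """Lowercase the prompt, undo character swaps, and strip separators."""
    text = prompt.lower().translate(LEET_MAP)
    # Remove punctuation used to split a word, e.g. "g.o.r.e" -> "gore".
    return re.sub(r"[^a-z\s]", "", text)


def flagged_terms(prompt: str) -> set:
    """Return the blocked terms found in the normalized prompt."""
    return set(normalize(prompt).split()) & BLOCKED_TERMS
```

With these placeholder values, both `flagged_terms("a g0r3 scene")` and `flagged_terms("a g.o.r.e scene")` return `{"gore"}`. Slang and indirect meaning are not handled by this approach, which is where learned models come in.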

If the system detects a problem, it could:

- Deny the request

- Ask you to rephrase

- Show a safety warning

Image Generation Control

Once the text check passes, the AI generates an image. However, the work does not end there. The image is analyzed before it reaches the user.

AI employs image recognition algorithms to search for:

- Nudity or inappropriate body parts

- Extreme violence or blood

- Hate signs or symbols

- Dangerous, disturbing, or scary content

If the image fails the requirements, it will be blocked or replaced with a blurred version.
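
One way to picture this decision is as a set of per-category scores compared against thresholds. The sketch below assumes a hypothetical image classifier that returns a score between 0 and 1 for each category; the category names, the threshold values, and the `decide` function are illustrative assumptions, not values used by any real product.

```python
# Assumed per-category thresholds above which an image is flagged.
THRESHOLDS = {"nudity": 0.5, "violence": 0.6, "hate_symbol": 0.4}

# Assumed score at or above which a flagged image is blocked instead of blurred.
BLOCK_AT = 0.9


def decide(scores: dict) -> str:
    """Map classifier scores to "allow", "blur", or "block"."""
    flagged = [
        category
        for category, threshold in THRESHOLDS.items()
        if scores.get(category, 0.0) >= threshold
    ]
    if not flagged:
        return "allow"
    if any(scores[category] >= BLOCK_AT for category in flagged):
        return "block"
    return "blur"
```

Under these assumed values, `decide({"violence": 0.7})` yields `"blur"`, while `decide({"hate_symbol": 0.95})` yields `"block"`. Where the thresholds sit determines how strict the filter feels to users.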

Face and Identity Protection

Protecting real individuals is among the most crucial safety rules. The system identifies faces so that misuse can be prevented.

AI image censorship prevents the following:

- The creation of synthetic images of real individuals

- Editing someone’s face into dangerous situations

- Creating deepfake-style images

This protects celebrities and private individuals alike from false accusations, scams, and identity theft.
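
The article does not say how faces are matched, so the following is only one common approach, sketched under assumptions: each face is reduced to a numeric vector (an embedding) by some hypothetical model, and a new face is compared against a protected list using cosine similarity. The names `cosine_similarity` and `matches_protected`, the two-dimensional vectors, and the 0.8 threshold are all illustrative.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def matches_protected(embedding: list, protected: dict, threshold: float = 0.8) -> list:
    """Return names of protected people whose embedding is close to this one."""
    return [
        name
        for name, reference in protected.items()
        if cosine_similarity(embedding, reference) >= threshold
    ]
```

Real embeddings have hundreds of dimensions, but the comparison step works the same way: a match above the threshold would cause the request or image to be refused.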

Cultural and Regional Rules

Safety filters also vary depending on culture and legal systems. What is acceptable in one country may not be acceptable in another.

For instance:

- Some symbols may be deemed offensive or unacceptable in certain cultures

- Data privacy statutes vary across nations

- Age limits differ globally
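
Regional differences like these are often easiest to manage as configuration. The sketch below assumes a default policy with per-region overrides; the region names, symbol names, age values, and the `policy_for` function are placeholders and do not reflect any real country's rules.

```python
# Assumed baseline policy applied everywhere unless overridden.
DEFAULT_POLICY = {"min_age": 18, "blocked_symbols": set()}

# Placeholder overrides; real values would come from legal review.
REGION_OVERRIDES = {
    "region_a": {"min_age": 16},
    "region_b": {"blocked_symbols": {"symbol_x"}},
}


def policy_for(region: str) -> dict:
    """Return the default policy with any overrides for this region applied."""
    policy = dict(DEFAULT_POLICY)  # copy so the defaults are never mutated
    policy.update(REGION_OVERRIDES.get(region, {}))
    return policy
```

An unknown region simply falls back to the defaults, so adding a new country only means adding one entry to the overrides table.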

AI companies try to adhere to international standards as well as local rules in order to remain responsible.

Human Review & Feedback

AI is very powerful, but it is not perfect. This is where human review comes in. Once a user lodges a complaint, the case is reviewed, and the filters are updated.

User feedback helps to:

- Correct mistakes

- Decrease false blocks

- Strengthen future safety standards

Gradually, these corrections enable the AI to improve its accuracy.
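
As a rough illustration of how feedback can guide updates, the sketch below counts how often human reviewers overturned a block for each filter rule, so the rules causing the most false blocks can be revisited first. The report format, the rule names, and the `rules_to_review` function are assumptions made for this example.

```python
from collections import Counter


def rules_to_review(reports: list, min_overturned: int = 2) -> list:
    """List rules whose blocks were overturned at least `min_overturned` times.

    `reports` is a list of (rule_name, was_overturned) pairs, one per
    user complaint that a human reviewer has examined.
    """
    overturned = Counter(rule for rule, was_overturned in reports if was_overturned)
    # most_common() orders rules from most to fewest overturned blocks.
    return [rule for rule, count in overturned.most_common() if count >= min_overturned]
```

For example, if two "violence" blocks and one "nudity" block were overturned, only "violence" would be returned with the default cutoff of two.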

Limitations of AI Image Censorship

Though filters work well, AI can sometimes get it wrong. Either innocent pictures are filtered out, or dangerous ones are allowed through. Developers are continually trying to improve the technology so that fewer mistakes occur.

The aim is a balance between creativity and user safety.
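
These two kinds of mistakes can be measured separately. The sketch below assumes a set of test images that humans have already labeled as harmful or not, and computes the share of safe images that were wrongly blocked and the share of harmful images that slipped through. The `error_rates` function and its record format are illustrative assumptions.

```python
def error_rates(records: list) -> tuple:
    """Return (false_block_rate, miss_rate) for a list of filter decisions.

    `records` is a list of (is_harmful, was_blocked) boolean pairs.
    """
    safe = [blocked for harmful, blocked in records if not harmful]
    harmful = [blocked for is_harmful, blocked in records if is_harmful]

    # Share of safe images the filter blocked by mistake.
    false_block_rate = sum(safe) / len(safe) if safe else 0.0
    # Share of harmful images the filter failed to block.
    miss_rate = sum(1 for blocked in harmful if not blocked) / len(harmful) if harmful else 0.0
    return false_block_rate, miss_rate
```

Making a filter stricter usually lowers the miss rate but raises the false block rate, and loosening it does the opposite, which is why tracking both numbers matters.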

The Future of AI Image Censorship

As AI technology advances, image censoring systems will become smarter. Future image filtering systems will be able to comprehend the meaning of images and the intentions of users. This will result in less confusion and greater accuracy.

One key area of improvement is the detection process itself, which will happen almost instantly and more accurately. This is especially helpful for preventing harmful images from being generated in the first place. Another area is fairness.

Additionally, AI companies are focusing on transparency, which means that users will be able to understand why a particular request was declined.

However, some tension will always remain. Excessive censorship can stifle creativity, while overly lax censorship can cause harm. This is why AI developers strive to find the right level of censorship.